Method Panel

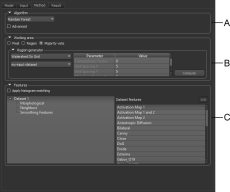

The Method panel includes the classifier engine and its parameters, the working area, which determines whether the classifier is trained on pixels only or the pixels within region features, as well as the features that will be extracted from the input dataset(s) to train the classifier.

Click the Method tab on the Machine Learning Segmentation dialog to open the Method panel, shown below.

Method panel

A. Algorithm B. Working area C. Features

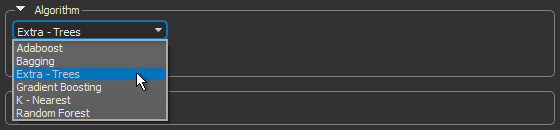

The available machine learning algorithms are accessible in the top section of the Method panel, as shown below.

Algorithm

Check the Advanced option to view the parameters associated with the current algorithm. You can modify the parameters, as well as save your modifications as the default settings. See Models List for information about the files saved with each classifier. The default algorithm parameters are saved for each available engine in the root directory C:\ProgramData\ORS\DragonflyXX\Data\OrsTrainer\Classifiers with a *.segParam extension.

You should note the following when you select an machine learning algorithm:

- Each algorithm will react differently to the same inputs.

- Trying different algorithms will most likely highlight the best choice for a given dataset.

- Changes you make to an algorithm parameters are saved automatically with the model. You can also save your changes as the default settings.

- The inputs to the algorithm will have a big influence on the resulting classification, especially the number of estimators. Too few estimators won’t train the model enough, but too many may over train it. Both conditions may result in poor classification.

|

|

Description |

|---|---|

|

K-Nearest* |

Implements learning based on the k nearest neighbors of each query point, where k is the specified integer value. The optimal choice of the value k is highly data-dependent. In general a larger k suppresses the effects of noise, but makes the classification boundaries less distinct. |

|

Ensemble Methods** |

The goal of ensemble methods is to combine the predictions of several base estimators built with a given learning algorithm in order to improve generalizability and robustness over a single estimator. Ref: http://scikit-learn.org/stable/modules/ensemble.html AdaBoost… An AdaBoost classifier is a meta-estimator that begins by fitting a classifier on the original dataset and then fits additional copies of the classifier on the same dataset but where the weights of incorrectly classified instances are adjusted such that subsequent classifiers focus more on difficult cases. Ref: http://scikit-learn.org/stable/modules/generated/sklearn.ensemble.AdaBoostClassifier.html Bagging… A Bagging classifier is an ensemble meta-estimator that fits base classifiers each on random subsets of the original dataset and then aggregate their individual predictions (either by voting or by averaging) to form a final prediction. Such a meta-estimator can typically be used as a way to reduce the variance of a black-box estimator (e.g., a decision tree), by introducing randomization into its construction procedure and then making an ensemble out of it. Ref: http://scikit-learn.org/stable/modules/generated/sklearn.ensemble.BaggingClassifier.html Extra Trees… This classifier implements a meta estimator that fits a number of randomized decision trees (also known as extra-trees) on various sub-samples of the dataset and uses averaging to improve the predictive accuracy and control over-fitting. Ref: http://scikit-learn.org/stable/modules/generated/sklearn.ensemble.ExtraTreesClassifier.html Gradient Boosting… Builds an additive model in a forward stage-wise fashion; it allows for the optimization of arbitrary differentiable loss functions. In each stage n_classes_ regression trees are fit on the negative gradient of the binomial or multinomial deviance loss function. Binary classification is a special case where only a single regression tree is induced. Ref: http://scikit-learn.org/stable/modules/generated/sklearn.ensemble.GradientBoostingClassifier.html Random Forest… A Random Forest is a meta estimator that fits a number of decision tree classifiers on various sub-samples of the dataset and uses averaging to improve the predictive accuracy and control over-fitting. The sub-sample size is always the same as the original input sample size but the samples are drawn with replacement if Ref: http://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestClassifier.html |

* Neighbors-based classification is a type of instance-based learning or non-generalizing learning that does not attempt to construct a general internal model, but simply stores instances of the training data. Classification is computed from a simple majority vote of the nearest neighbors of each point: a query point is assigned the data class which has the most representatives within the nearest neighbors of the point.

** Two families of ensemble methods are usually distinguished — Averaging and Boosting. In Averaging methods, such as Bagging and Random Forest, the driving principle is to build several estimators independently and then to average their predictions. On average, the combined estimator is usually better than any of the single base estimator, because its variance is reduced. By contrast, in Boosting methods like AdaBoost and Gradient Boosting, base estimators are built sequentially and one tries to reduce the bias of the combined estimator. The motivation is to combine several weak models to produce a powerful ensemble.

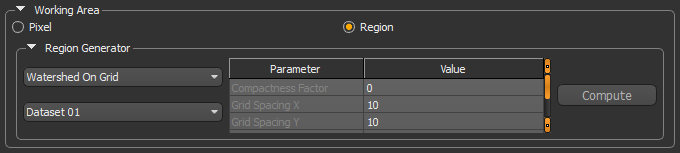

The working area — Pixel, Region, or Majority Vote — determines whether the model is trained based on pixels only or the pixels within region features.

In pixel-based training, the dataset features extracted are the intensity value(s) of the pixel directly. In this case, the feature vector has the same length as the number of pixels in the image (see Dataset Features).

In region-based training, the region features is the information extracted from the intensities of the pixels in a given region. In this case, the feature vector has the same length as the number of regions defined for the image (see Region Features).

Region working area

Working on regions increases the statistics of the feature vector provided to the classifier so it is less likely to be sensitive to noise. However, the way the regions are defined is crucial because they must group together pixels corresponding to the same class. If a region overlaps pixels of different classes, it can confuse the classifier. Whenever possible, you should create segmentation labels after the region has been generated (see Generating Regions).

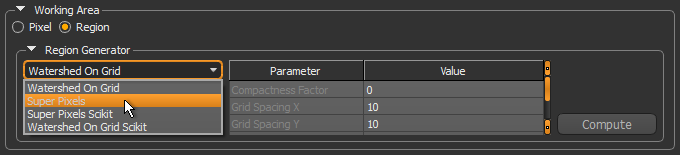

The different algorithms that can be used to generate regions from the selected dataset are described below. In future releases of Dragonfly you will be able to provide your own region generators.

|

|

Description |

|---|---|

|

Watershed on Grid |

This algorithm applies a Gaussian filter, and two sequential Watersheds. The first Watershed is applied using the Sobel of the provided dataset as the landscape and a 2D grid as the seeds. The 2D grid is defined by a given X and Y spacing. If a seed point is on a maximum of the Sobel, it is moved in the direction of the gradient. The second Watershed is applied still using the Sobel of the provided dataset as the landscape, but using the output of the first Watershed as the seeds for the regions bigger than a given number of pixels. The smaller regions with irrelevant statistics are therefore eliminated. |

|

Super Pixels |

The algorithm applies a Gaussian filter and simple linear iterative clustering (SLIC). |

|

Super Pixels Scikit |

This region generator is implemented from the scikit-image collection of algorithms for image processing. Ref: http://scikit-image.org/docs/dev/api/skimage.segmentation.html#skimage.segmentation.slic |

|

Watershed on Grid Scikit |

This region generator is implemented from the scikit-image collection of algorithms for image processing. Ref: http://scikit-image.org/docs/dev/api/skimage.morphology.html#skimage.morphology.watershed |

- Choose Region as a working area on the Features panel.

- Choose a region generator in the Region Generator drop-down menu.

Refer to the table Region generators for information about the available region generators and the parameters associated with each generator.

- Modify the default settings of the selected region generator, if required.

- Select the dataset that will used to compute the region in the Dataset drop-down menu.

- Click the Compute button to preview the generated regions.

- Evaluate the result, recommended.

- If required, modify the settings of the selected region generator or try a different generator.

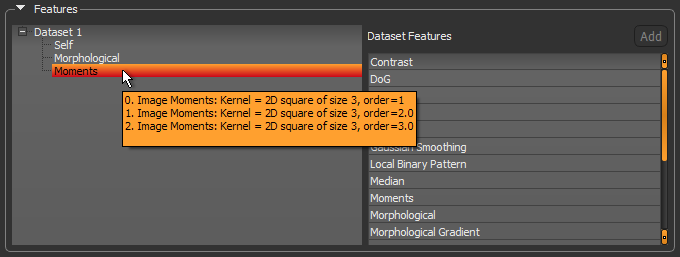

Datasets added to the Input Panel are automatically added to the features tree since they are the base to which dataset or region features presets can be added. Each feature preset added to the tree gives more information to the trainer. After training, the relevance of each feature preset, on a scale from 0 to 1, is displayed in the tree to help you judge whether the feature preset provides useful information to the classifier or not. Features determined to be ineffective can be removed from the features tree.

Whenever a feature preset is added to the features tree of the current classifier, a copy is made and saved with the model. You can then edit the copy without editing the default features preset. Dataset features presets must be added to a dataset, while region features must be added to a dataset feature. It is possible to preview dataset features and region features during the training workflow and prior to segmentation.

The dataset features presets are a stack of filters that are applied to a dataset to extract information to train a classifier.

The default dataset feature presets are saved in the directory C:\ProgramData\ORS\Dragonflyxx\Data\OrsTrainer\FeaturePresets. Copied presets are saved with the classifier in the model directory.

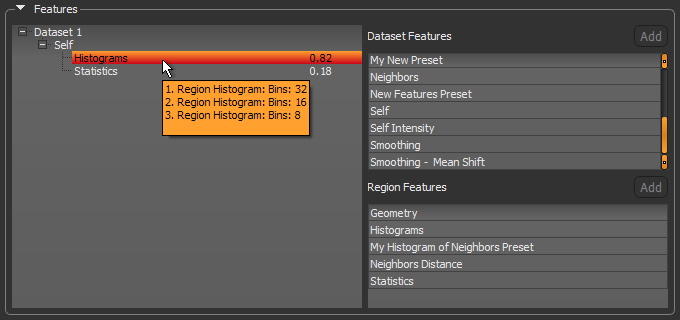

As shown below, a brief description of each filter in the stack of the currently selected preset is displayed on hover.

Dataset features

When the classifier works on regions and not directly on the pixel level, information is extracted from regions to build the feature vector. The features extracted from the region are different metrics used to represent the region itself. For example, the histograms of the intensities of the pixels in the given region, or to compare a given region and its surrounding, as is done with the Earth Movers Distance metric. Other metrics can be added as required.

Default region feature presets are saved in the directory C:\ProgramData\ORS\Dragonflyxx\Data\OrsTrainer\RegionFeaturePresets. Copied presets are saved with the classifier in the model directory.

As shown below, a brief description of each filter in the stack of the currently selected preset is displayed on hover.

Region features